With Adaptive Learning 3.0, the Instructor Becomes the Learner

Personalized learning at scale? Check.

AI-powered, one-on-one coaching framework? Check.

Improved learning outcomes and more efficient learning processes? Check.

The learner-focused benefits of Adaptive Learning 3.0 are clear. But what if we told you that the advantages of this technology reach far beyond the learner? What if we said that Adaptive Learning 3.0, with all its data and predictive insights, can help Instructional Designers learn more about their content, their learners and themselves than they ever imagined? With Adaptive Learning 3.0, the instructor becomes the learner.

In fact, our Adaptive 3.0 learning platform gives Instructional Designers like you new tools to continually fine-tune your courses, learn what works and what doesn’t, and practice and grow your instructional design skills based on content efficacy data.

Learning What Works

Fulcrum’s Adaptive 3.0 learning platform leverages AI and machine learning to identify course patterns and provide deep insights into content efficacy. This means you no longer have to rely solely on learner feedback (or, even worse, on employees’ on-the-job mistakes and their consequences) to root out weak spots in your content and courses. Instead, you can turn to user-friendly dashboards of data-driven content insights to assess and refine courses with scalpel-like precision, and then monitor the results.

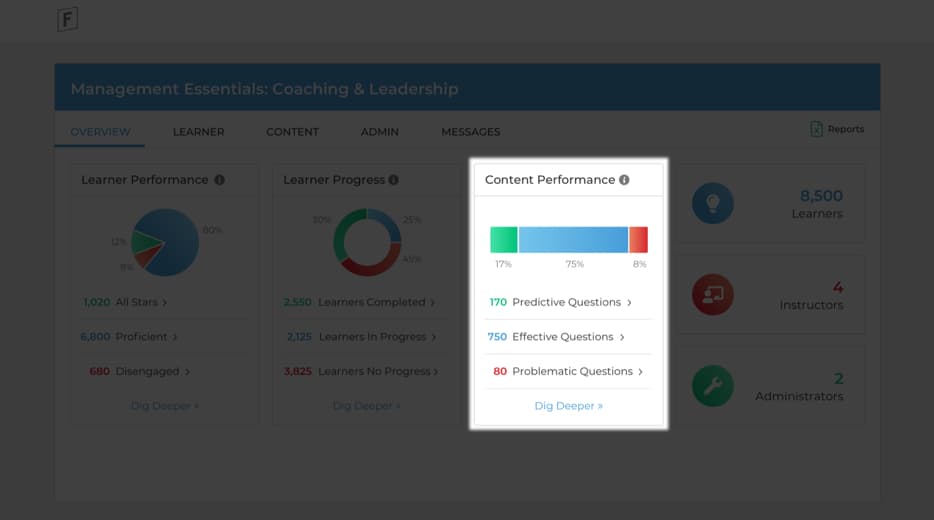

First, the Content Performance tab of Fulcrum’s administrator/instructor dashboard sorts questions into three content efficacy categories:

- Predictive Questions are those whose results correlate strongly with how a specific learner will perform in the course: whether a learner answers one correctly or incorrectly is a strong indicator of how that learner will do. (In this example there are 170 Predictive Questions. These could also serve other diagnostic purposes, such as a quick retention check-in down the road.)

- Effective Questions are those that are performing exactly the way you intended. (Here, 750 questions are doing their job. Together, 170 Predictive + 750 Effective Questions = 920 good reasons to be confident in the efficacy of this course.)

- Problematic Questions are ones that learners, across the board, are having difficulty answering correctly. (In this course, the system’s AI has pinpointed 80 opportunities to make it stronger.)

Digging Deeper

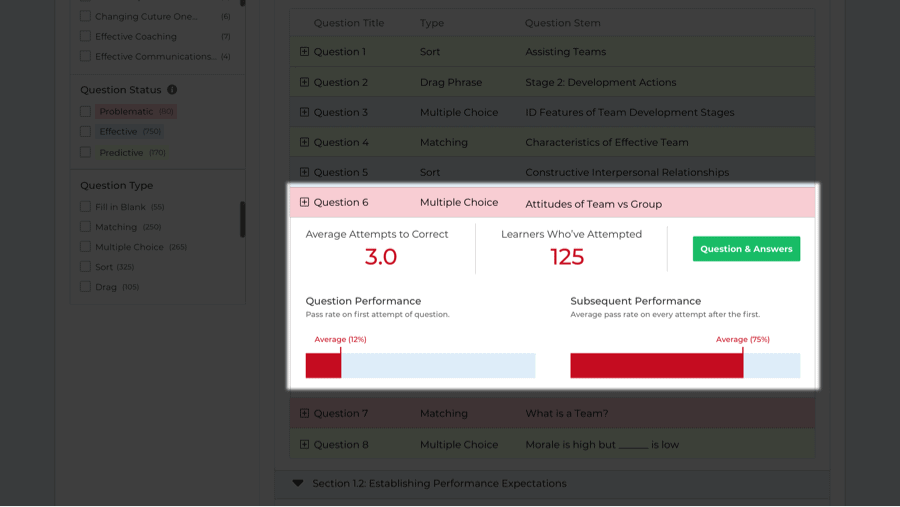

From here, you can “Dig Deeper” to discover which content the system has flagged as “Problematic” and why. In the dashboard screen below, for example, only 12% of learners answered this question correctly on the first attempt. That is a very low rate for our Adaptive 3.0 system, which is designed to target Optimal Challenge.

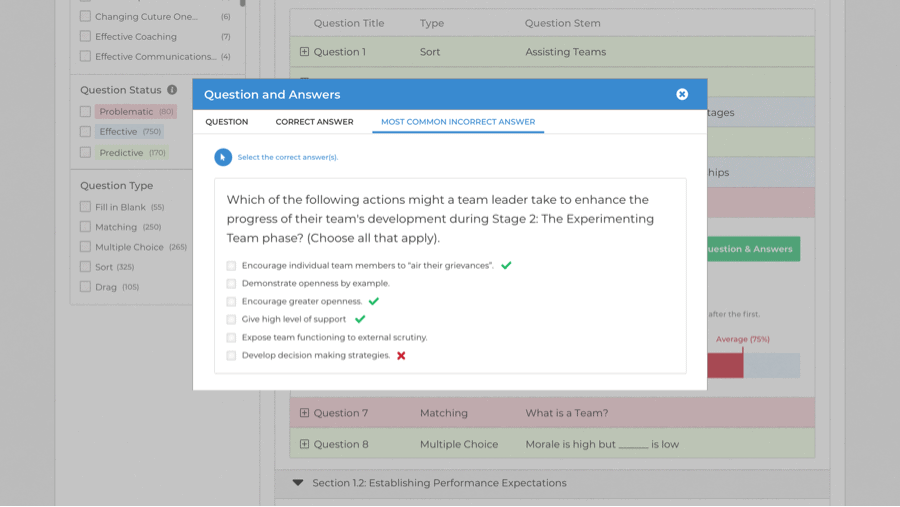

Then, you can quickly review the question, the correct answers and key data, such as the most common incorrect answer, or “ID favorite” (see dashboard below).

In some cases, you’ll have an “aha” moment: you make a quick edit and publish the revision, and the system’s AI begins tracking its performance. In other cases, after looking at the data, you’ll realize that you need to add supporting content or replace the question altogether.

As you’d imagine, this fine-tuning improves course performance, the learner experience and buy-in for training as a whole.

Finding Areas for Practice and Growth

But this data doesn’t just help you improve course performance, learner experiences and your learning culture; it also helps you sharpen your instructional design skills and build your confidence. The feedback the Fulcrum system provides gives you the opportunity to:

- Make edits and grow your proficiency in question writing and course design in a safe, non-judgmental space, with plenty of feedback along the way. For example, if course data reveals that learners need an exceptionally high number of attempts to answer a particular question type, you could use this data to refine those questions and practice writing them more effectively.

- Gain greater confidence that your course works: that it’s meeting its stated performance goals. Instructional Designers tell us they find this incredibly validating. Armed with “success data,” they can actually see how their efforts are paying off.

- Create a roadmap for future course changes. Seeing performance by learning objective reveals the topic areas where learners may be struggling or excelling. This helps you decide whether content needs to be added to the course to give learners more foundational material. (Conversely, a learning objective with only Predictive questions tells you that learners are confident with that objective, and those topics may need less focus in the course.) All this intel helps you build a more effective roadmap for future content changes, additions and subtractions, so you can manage your resources accordingly.

It turns out that finding an opportunity to learn doesn’t have to be hard or time-consuming. For Instructional Designers using our platform, all it takes is a quick login to the Fulcrum dashboard. And remember, you’re never alone: you have our Client Success and Data Science teams behind you, and we have experience creating hundreds of hours of adaptive content and tens of thousands of adaptive assessments.

If you want to learn more about the content and development tools that can help maximize the efficacy and efficiency of your training course, let’s connect.